Twenty-one developers from nine countries gathered in Bonn, Germany, from June 20 to June 24 to work on the upcoming Plone 6. The Plone framework team declared the sprint, held at the office of kitconcept GmbH, a “strategic sprint”. Sprint topics included Volto, the Plone REST API, and Guillotina.

Volto

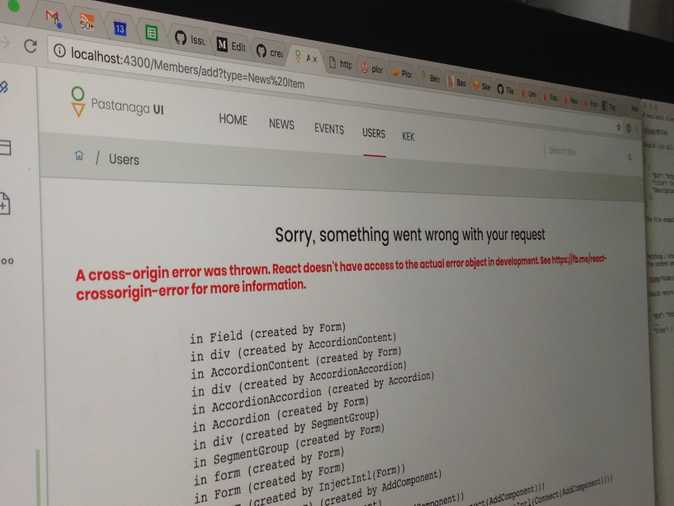

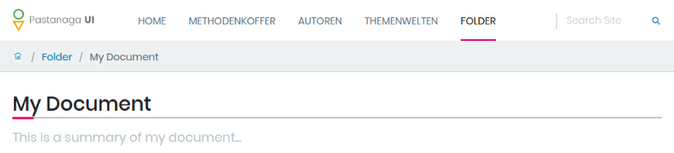

Volto is a new ReactJS-based frontend for Plone. Rob Gietema started it in 2017, and it has been under active development ever since. Volto will become the default frontend for Plone 6. It implements a completely new user interface called Pastanaga UI, which was developed by Albert Casado.

Victor Fernandez de Alba and Rob Gietema led the efforts to further enhance Volto together with Paul Roeland, Nicola Zambello, Piero Nicolli, Janina Hard, Jakob Kahl, Thomas Kindermann, Maurizio Delmonte, Stefania Trabucchi, Rodrigo Ferreira de Souza, Fred van Dijk, and Steffen Lindner.

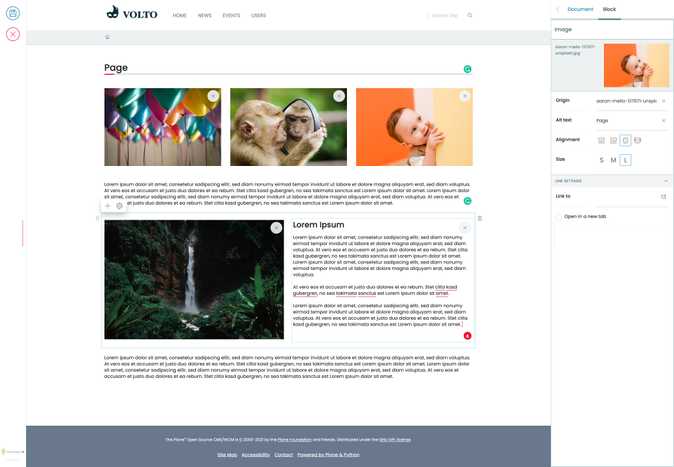

Pastanaga UI Toolbar

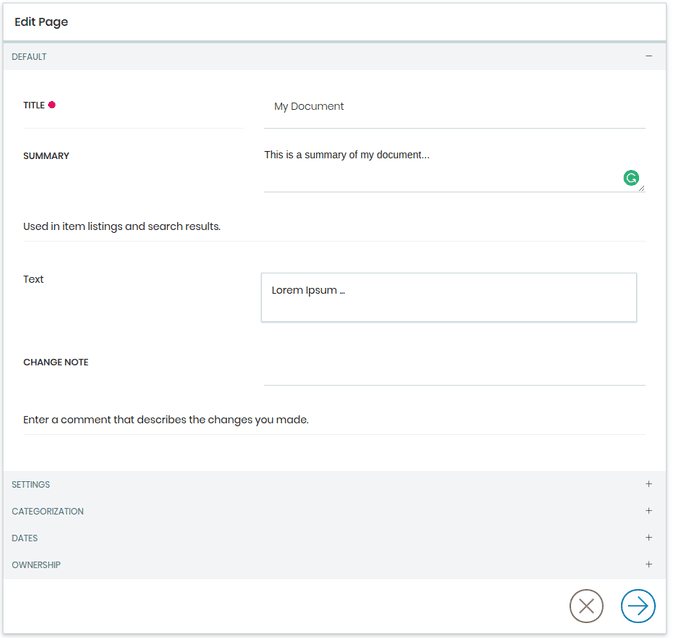

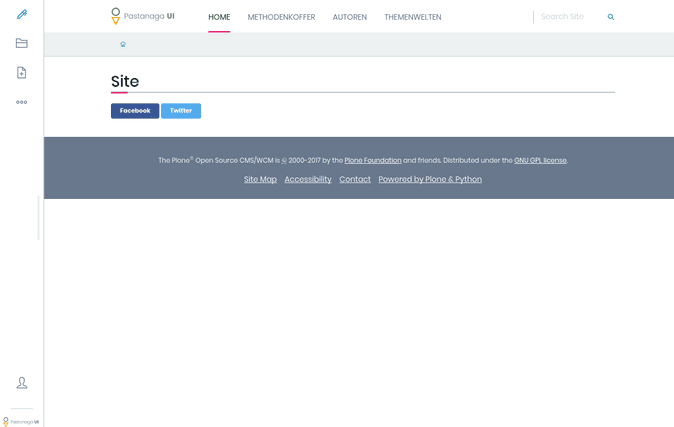

The new editing toolbar has been part of Volto right from the start. However, we had not yet implemented every detail that Albert envisioned for the Pastanaga UI toolbar. Victor has been working on this for quite a while. During the sprint, he integrated and polished his work. Victor and Rob also worked on a new sidebar on the right side of the Volto user interface that holds additional information, such as page metadata, and controls the settings of individual tiles.

The new Volto edit toolbar

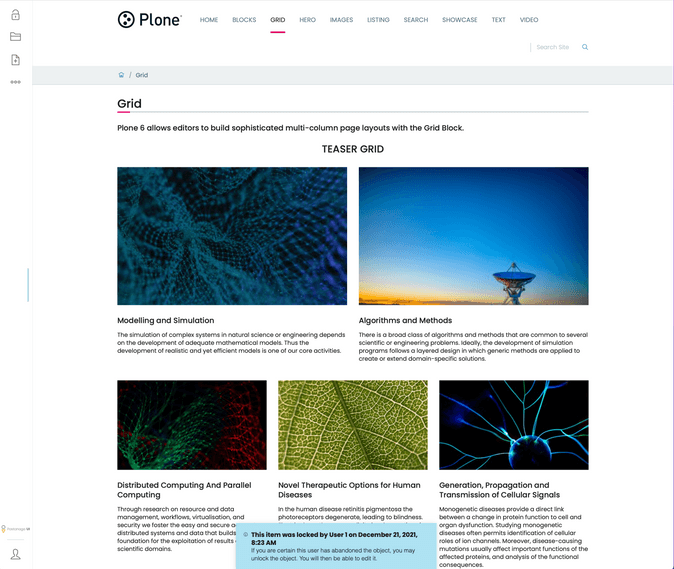

New Tiles: Collection, Slider and Table

At the Plone conference in Tokyo, we drew up a list of tiles that we would like to implement for the new Volto composite page editor. At the top of that list were the listing/collection tile, the slider tile, and the table tile. Piero, Rodrigo, and Rob started working on the listing/collection tile, which is supposed to replace the Collection type in Plone.

Jakob and Janina worked on porting a slider tile that kitconcept had developed. Rob implemented the table tile. As always, he had finished his work before anyone could ask him about his progress…

The new Volto table tile

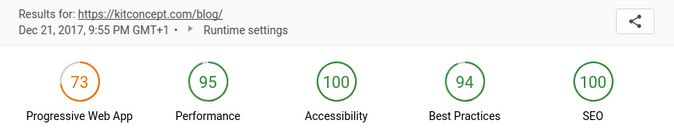

Accessibility

Accessibility has always been a first-class citizen in the Plone community. Plone powers many government and public-sector websites where accessibility is a basic requirement. Paul, Thomas, Timo, and Victor worked on improving the accessibility of Volto. Our goal is full support for the WCAG 2.1 standard at the “AA” conformance level.

Timo set up ESLint-based static code analysis checks and Cypress-based axe accessibility tests. Paul, Thomas, and Victor then started to fix the reported accessibility issues. By the end of the sprint, we had fixed all reported violations. Our CI build now fails if a commit introduces new accessibility violations.
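
The real checks run as Cypress tests with axe. The Python sketch below only illustrates the “fail the build on any violation” rule, assuming the axe-core results have been exported as JSON. axe-core reports a `violations` array in which each entry carries a rule `id` and a list of offending `nodes`; the function names `count_violations` and `accessibility_gate` are hypothetical.

```python
import sys


def count_violations(axe_results: dict) -> dict:
    """Map each violated axe rule id to the number of offending DOM nodes."""
    return {
        violation["id"]: len(violation.get("nodes", []))
        for violation in axe_results.get("violations", [])
    }


def accessibility_gate(axe_results: dict) -> int:
    """Return a CI exit code: 0 when axe found no violations, 1 otherwise."""
    violations = count_violations(axe_results)
    for rule_id, node_count in sorted(violations.items()):
        # Report each failing rule so the build log explains the failure.
        print(f"{rule_id}: {node_count} node(s)", file=sys.stderr)
    return 1 if violations else 0
```

A CI step could then call `sys.exit(accessibility_gate(results))` after loading a (hypothetical) `axe-results.json` with `json.load`, so any new violation turns the build red.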

Documentation

Sven Strack, the Plone documentation team leader, joined our sprint remotely from Amsterdam to discuss how to structure and maintain the Volto documentation in the future. We all agreed to use Markdown as the format for our docs, since it is the de facto standard in the JavaScript community as well as in most other open source communities. As much as we like reStructuredText, it seems that Markdown has won that battle.

We currently use MkDocs for the Volto documentation and Styleguidist to document our React components. There are lots of tools to choose from: Docz, MkDocs, MDX, and Gatsby, to name just a few. We agreed that we need to do more research before we can make an educated decision about our documentation toolchain.

This year, as part of a Google Season of Docs project, a technical writer will help us enhance the Volto docs further.

More Volto Features

Nicola added a feature that displays the currently used Volto version in the Plone control panel. He also added animations for page transitions and an Italian translation for Volto. Together with Victor, he worked on a toast component for user notifications.

Piero worked on improving and fixing the event type view and added styling for the listing tile.

Victor and Steffen worked on the image sidebar.

Rodrigo added a feature that lets users press SHIFT+RETURN to create a new line in a Volto text tile. By default, Volto creates a new text tile on RETURN. Rodrigo also looked into the Add-ons control panel work that Eric Steele started some time ago.

Nilesh joined us remotely from India and continued his work on the users and groups control panel for Volto.

Steffen fixed a bug in the subjects search. Rodrigo and Thomas looked into our Cypress-based acceptance tests.

Fred looked into the consequences of getting rid of the description tile, and into how we could customize the domain in Plone to allow sending portal emails via the Volto frontend.

Stefania and Maurizio worked on a product definition of Volto and an elevator pitch for clients and users. Together with Paul, Fred, and Timo, they had a long discussion about how to position Volto and Plone 6 in the market.

Plone REST API

The Plone REST API is the foundation for the new Volto frontend and Plone 6. It has been under active development for the last four years and has seen more than 80 releases in that time. The REST API is stable, battle-tested, and production-ready, and it has been part of Plone core since the release of Plone 5.2.

Thomas Buchberger and Lukas Graf led the REST API efforts during the sprint and worked with Carsten Senger, Roel Bruggink, Janina Hard, and Timo Stollenwerk to further enhance the REST API, fix bugs, and improve the documentation and the error handling.

Plone REST API discussion in the garden

Querystring Endpoint for the Collection Type

One of the most important features developed during the sprint is the new querystring endpoint. This endpoint will allow us to implement the Collection type in the Volto frontend. Lukas implemented this essential feature with help from Rob, who took care of the frontend implementation in Volto.
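
For context, Plone collections store their search as a list of criteria in the plone.app.querystring format, where each criterion names a catalog index (`i`), an operation (`o`), and a value (`v`). As we understand it, the querystring endpoint tells a frontend such as Volto which indexes and operations are available for building these criteria. The minimal sketch below only builds such a criteria list; the helper names are hypothetical, and this is not the endpoint's own code.

```python
# Operation id from plone.app.querystring: matches items whose index value
# is any of the given values.
SELECTION_ANY = "plone.app.querystring.operation.selection.any"


def criterion(index: str, operation: str, value) -> dict:
    """One collection criterion: catalog index (i), operation (o), value (v)."""
    return {"i": index, "o": operation, "v": value}


def collection_query(portal_types, review_states) -> list:
    """Hypothetical helper: criteria for 'these types in these states'."""
    return [
        criterion("portal_type", SELECTION_ANY, list(portal_types)),
        criterion("review_state", SELECTION_ANY, list(review_states)),
    ]
```

For example, `collection_query(["News Item"], ["published"])` describes a collection of published news items.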

New Features, Bugfixes, and first time contributions

Thomas added support for retrieving additional metadata fields in summaries, in the same way as in search results. Lukas made the @types endpoint expandable and the @users endpoint easier to customize. Janina fixed the setting of the effective date on workflow transitions. This was her first contribution to Plone core: on the same day, she signed the contributor agreement and got her fix merged and released, which is quite an accomplishment for a single day. Way to go, Janina!

In addition to that, the team fixed lots of smaller bugs (#780, #729, #764, #755, #777, #732, #738) during the sprint.

REST API Error handling

Carsten, Nathan, Thomas, Lukas, and Timo had a long discussion about error handling in the Plone REST API and how we can improve it. We considered implementing RFC 7807 (Problem Details for HTTP APIs) and decided against adopting it.

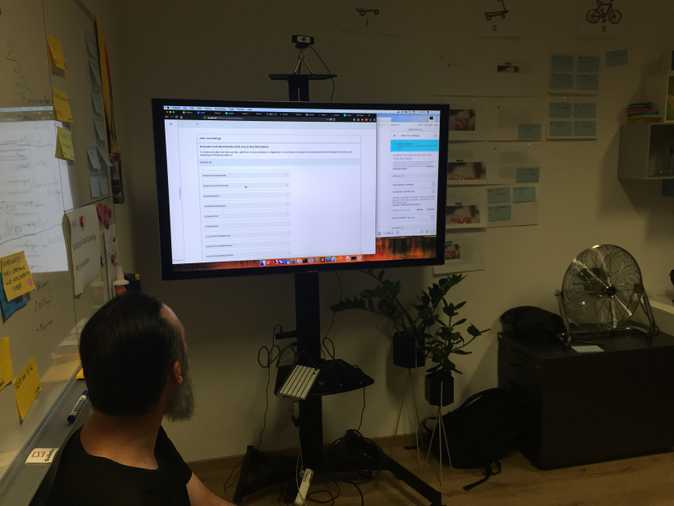

Sprint discussions…

The RFC has not seen much adoption, and it differs only slightly from what we are already doing, so a breaking change did not seem worth the effort. Instead, we decided to create a separate error reporting component that unifies error reporting and ensures consistency. We also started fixing a few inconsistencies during the sprint.
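
To make the comparison concrete: RFC 7807 defines a JSON object (media type `application/problem+json`) with the members `type`, `title`, `status`, `detail`, and `instance`. The sketch below maps an error body of the shape `{"type": ..., "message": ...}` onto those members. That input shape is an assumption about the current plone.restapi format, and the `error_type` extension member is hypothetical; the sketch only shows how small the mapping is, not code the team wrote.

```python
from http import HTTPStatus


def to_problem_details(error: dict, status: int) -> dict:
    """Map an error body with "type" and "message" keys to RFC 7807 members."""
    return {
        # "about:blank" means no semantics beyond the HTTP status code, so
        # RFC 7807 says the title should be that status code's reason phrase.
        "type": "about:blank",
        "title": HTTPStatus(status).phrase,
        "status": status,
        "detail": error.get("message", ""),
        # Hypothetical extension member that keeps the existing error name.
        "error_type": error.get("type"),
    }
```

For a 404 error, `title` becomes `"Not Found"` while the original message moves to `detail`.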

API Documentation

Guillotina uses Swagger to document its API. Nathan explained the approach he took, and Carsten started integrating Swagger into plone.restapi to generate dynamic API docs.

REST API Releases

Most of the work we did on Plone REST API during the sprint has been released in Plone REST API 4.1.4, 4.2.0, 4.3.0, and 4.4.0.

Dexterity Site Root

Roel Bruggink joined us on Saturday and Sunday to continue his work on turning the Plone site root into a Dexterity content object. He fixed some complex recursion and acquisition problems that occurred when migrating the site root to Dexterity. His Plone Improvement Proposal (PLIP #2454) has been accepted by the Plone framework team, and it will form the foundation of a new Plone 6 branch that we plan to cut this year.

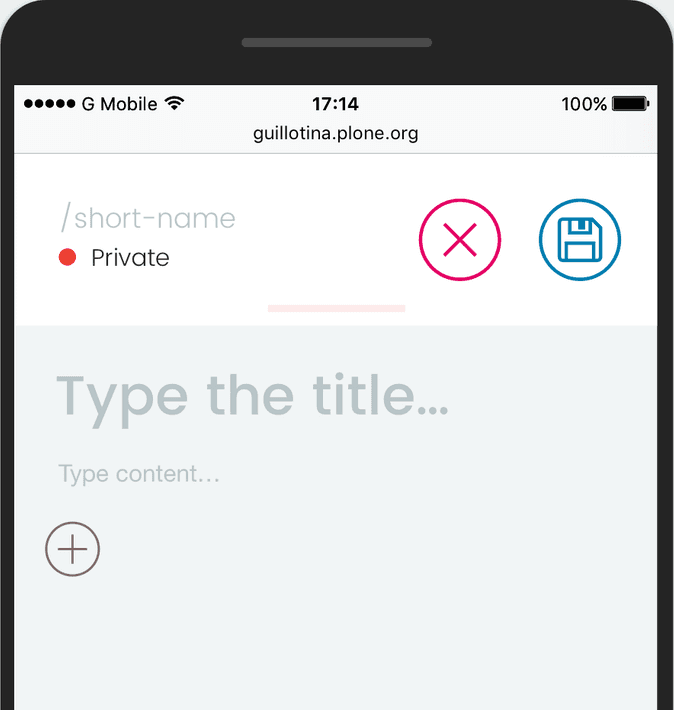

Guillotina

Guillotina is a new AsyncIO REST data API. Ramon Navarro Bosch and Nathan van Gheem wrote it from scratch. It can easily scale to billions of objects exposed via its REST API. With the CMS add-on, it is compatible with the Plone REST API. Therefore, you can run Volto on a Guillotina backend, and Guillotina might be able to replace our existing Plone backend in the future.

The Guillotina master thinking hard. :)

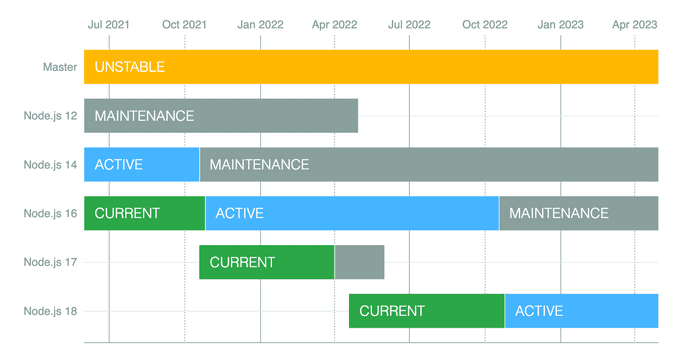

Nathan and Ramon worked on Guillotina 5. The Guillotina 5 release brought Python 3.7 support and the use of the contextvars API. They refactored quite a bit, moved parts of add-ons into core (Swagger, caching, PostgreSQL catalog), implemented an out-of-the-box PostgreSQL catalog, added Helm/Kubernetes/Docker configurations for the Guillotina CMS add-on, which can be configured to act as a Volto backend, and worked on improving caching and file storage.

Websockets for Plone

At the Plone Open Garden 2019, Asko Soukka started working on bringing websocket support to Plone. Websockets allow bidirectional communication between the server and the browser, so the server can send messages and notifications to the browser without waiting for a request. This can be used to implement notification systems or collaborative editing, to name just a few possible applications.

Asko continued his work during the Beethoven Sprint and created an example in which comments added in Plone automatically pop up as toast notifications for other users. He published a Docker image with a Twisted-based ZServer and laid the foundation for using this feature in gatsby-source-plone. This will let us build an extension that allows editors to live-preview the changes they make in Plone on a GatsbyJS site.
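
Asko's implementation runs on a Twisted-based ZServer; the sketch below does not use websockets at all. It only models the fan-out step of his comment example in plain asyncio, with one `asyncio.Queue` per user standing in for an open websocket connection: when a comment is published, every other connected user receives a notification. `NotificationHub` and `demo` are hypothetical names.

```python
import asyncio


class NotificationHub:
    """Hypothetical hub: one asyncio.Queue stands in for each open websocket."""

    def __init__(self) -> None:
        self._clients = {}

    def connect(self, user: str) -> asyncio.Queue:
        queue = asyncio.Queue()
        self._clients[user] = queue
        return queue

    def disconnect(self, user: str) -> None:
        self._clients.pop(user, None)

    def publish(self, author: str, message: str) -> None:
        # Push the message to every connected user except its author.
        for user, queue in self._clients.items():
            if user != author:
                queue.put_nowait(message)


async def demo() -> tuple:
    hub = NotificationHub()
    alice = hub.connect("alice")
    bob = hub.connect("bob")
    hub.publish("alice", "New comment on 'Sprint report'")
    # Bob is notified; Alice, who wrote the comment, is not.
    return await bob.get(), alice.empty()
```

In a real setup, each queue would be replaced by a websocket connection, and `publish` would be triggered by a comment-added event in Plone.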

Karaoke, Barbecue and Garden Parties

A Plone sprint is much more than just programming. Collaborating, meeting old friends, talking, discussing, and having a good time together are the ingredients that make a Plone sprint. We started the sprint on Thursday with a free React and Volto training for newbies. In the evening, once everybody had arrived, we went to a local karaoke bar to revive the epic karaoke sessions we had in Tokyo last year.

Karaoke at the “Nyx”

On Friday evening, we ordered pizza and hacked the night away in the office and our garden. On Saturday, we went to the “Arithmeum”, a mathematics museum owned by the Research Institute for Discrete Mathematics at the University of Bonn.

Our tour at the “Arithmeum”

Afterwards, we had a garden party with a barbecue and spent the afternoon in the garden and the office, where we continued to drink and hack on Plone.

Barbecue in the garden of the kitconcept office

Summary

The Beethoven Sprint 2019 was a major success. We started working on the main missing pieces of Volto. The new edit toolbar is in place, and the foundation for the new tiles/metadata sidebar on the right side of the UI is finished. We also implemented a new table tile.

We implemented a proof of concept for the new listing/collection tile and the required querystring endpoint in the Plone REST API. Both Volto and the Plone REST API received lots of enhancements and bugfixes.

Both the Plone REST API and Volto are stable and used in production. We will use the coming months to further enhance Volto and implement the missing bits and pieces. We plan to present Volto 4 at the Plone conference in Ferrara at the end of this year and afterwards cut a Plone 6 branch that contains the Dexterity site root, folderish content types, and other enhancements needed to make Volto the default frontend for Plone 6.

Guillotina, websocket support for ZServer, and Gatsby as another frontend for Plone are amazing projects by some of the smartest folks in our community. Our diversity is one of our main strengths, and we are looking forward to what the future will bring!

We had a great time at the sprint. Thank you to everyone who attended! We thank the Plone and Python communities for their ongoing support. The Plone Foundation, the Python Software Foundation, and the German Python Software Verband made this event possible with their sponsorship, as did our company sponsors Abstract, Starzel, Werkbank, and Zest.

We are looking forward to seeing you all in Bonn next year!

The “Hofgarten” near the kitconcept office

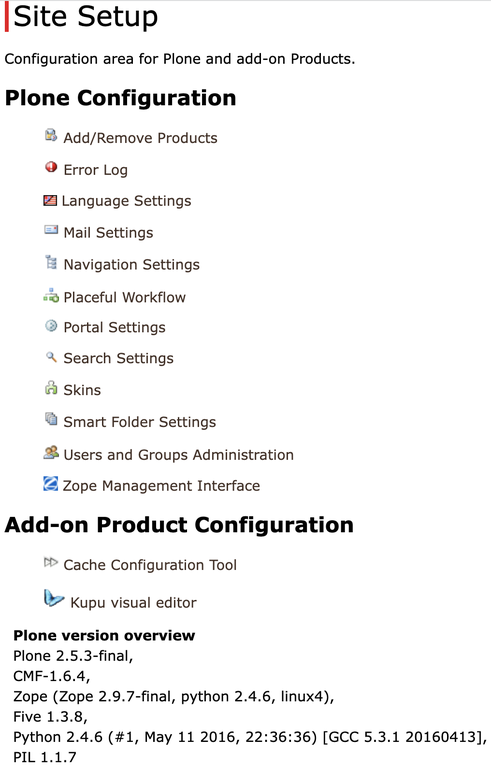

Screenshot of my website end of October 2021: still Plone 2.5

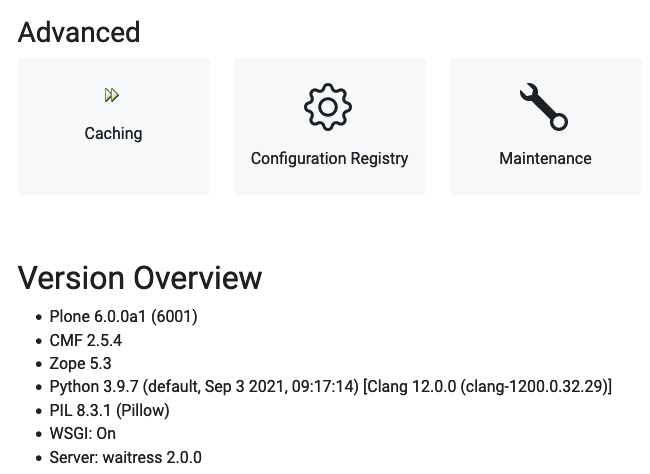

Screenshot of Site overview in new site.
